It’s been a weird year.

That’s hardly a rare sentiment for obvious reasons, but it was definitely an especially weird year for a small group of AI enthusiasts on a niche Discord server. It’s been one year since the founding of EleutherAI (back then still called LibreAI). It both feels like so much longer and like it was just yesterday.

We are humbled and exhilarated by just how far our little hobby project has come. What started as a small tinkering project for a few bored nerds has grown into a vibrant community and open source project more successful than we could have ever imagined.

We worked hard, we struggled, but more than anything, we had fun, a lot of fun. And we thought it would be fun to share some of our history and best moments (and memes) from this weird, weird year for your entertainment.

This post is a light-hearted trip down memory lane, and a look ahead for what might come next. We had a blast and we changed the world, we hope for the better.

From Humble Beginnings

The year is 2020. OpenAI has unveiled GPT-3, and the entire ML world is unimpressed. Well, not entirely... One small group of indomitable nerds still holds out against the scaling-deniers. And life is not easy for the symbolicists who garrison the fortified echo chambers of Twitter...

One day, on Shawn Presser's Discord server, one man with a history of getting into trouble building large models saw a paper that almost made even larger model training seem possible.

And so he wrote:

And another replied:

And the rest was history!

Quickly, we began to hash out our plans for world domination (ahem, TPU necromancy). Connor still had access to a generous amount of TPUs through TRC (the TPU Research Cloud) from his previous GPT-2 misadventures, so a few dedicated nerds wanted to see how far we could get with that. In all honesty, we didn't actually expect to get very far, but it was the height of a pandemic and we didn't exactly have anything better to do.

After liberally filling (some might say spamming) the text-AI-related channels of our gracious hosts, we decided that we should strike out on our own. And so, on this very day one year ago, the "LibreAI" Discord server was founded. Luckily, we wised up and picked a much cooler name shortly thereafter.

No, there has never been a space in EleutherAI.

And so, EleutherAI was born!

The Tensorflow Days

Armed with access to a truly irresponsible amount of TPU computing power, we began our newly-christened GPT-Neo project to build our very own large language model.

But there was a terrible price to be paid: we had to use TensorFlow to use our TPUs. Worse, the model sizes we were aiming for were so huge that we had to use an even more obscure library, Mesh TensorFlow, on top of it.

This was... not the easiest of things to do.

Progress was hard won, no thanks to our greatest eternal foe: the Kafkaesque nightmare that is TensorFlow documentation.

Artist's rendition of the hole that is TensorFlow documentation.

But our crack team of elite ML hackers was quickly making progress.

And we were sure that we would be able to start training our first models while only sacrificing a modicum of our mortal sanity.

And quickly, GPT-Neo took shape. A horrible, horrible shape, but shape nonetheless! We were well on our way to doing real ML research!

The Pile

What is training code without its data, though? Training large models needs a large collection of data. A big heap, if you will. A significant mass. A sort of mound, even. And so was born...

Since OpenAI was being stingy with details on what data they had trained on (and definitely weren’t releasing a copy), we were, as usual, forced to do it ourselves. After a cursory glance into the abject horror that is average Common Crawl data, we decided there had to be a better way, and so set about collecting our own large dataset, the Pile.

This went about as smoothly as you might imagine.

But, after months of hard work by many, many contributors at EleutherAI, on New Year's Day 2021, the Pile preprint went live.

First Results

The exciting news was quickly followed up (the next day!) by the announcement of our collaboration with CoreWeave, which pledged to provide us with GPUs to make this crazy project a reality. Released from the torment of having to work with TPUs without JAX (JAX wasn't yet in quite as usable a state as it is today), we began work on our new codebase, GPT-NeoX, in earnest soon after.

While we didn’t know it at the time, a second significant development occurred that day: Phil Wang (@lucidrains) and Eric Alcaide (@hypnopump) began collaborating on a replication of AlphaFold2.

With our first real results delivered, outside observers started to take notice. The first mainstream article about EleutherAI was published by VentureBeat around this time.

With the Pile wrapped up, and GPT-NeoX still a while away, we put our TPUs to work on training our first large GPT-Neo models.

Representative example of what training Neo was like.

And then we... promptly forgot about them.

We saw the 1.3B and 2.7B GPT-Neo models as our proofs of concept, learning experiences on the road towards making models orders of magnitude larger. There were so many problems with the codebase that we were more focused on figuring out how to deal with those than releasing our trained models. But after several months of sitting in storage, we finally got around to releasing them on March 21st, 2021.

The Second Era of EleutherAI

GPT-Neo and GPT-J

This might seem quaint in retrospect, but we really didn't think people would care that much about our "small models."

Turns out, people did care.

This marked something of a new era for EleutherAI. We had already gotten a good amount of attention for the Pile, but now we had proven to the world that we were the real deal. WIRED senior writer Will Knight published This AI Can Generate Convincing Text---and Anyone Can Use It, and other widely read articles followed. People were really excited to use our models!

In early April, we announced our exciting new GOOSE project. Information about the GOOSE project can be found at https://www.eleuther.ai/goose

GPT-NeoX was going well; we finally had code that could scale all the way to 175B parameters and beyond. We just needed the hardware to be ready. Unfortunately, we timed things perfectly to line up with a global GPU shortage, which made things... challenging. We are continuing to work with CoreWeave to source the computational resources we need.

While waiting for the code and resources for GPT-NeoX, we decided to put the spare TPUs to use training a larger model. A new codebase, Mesh Transformer JAX, was written for simplicity and efficiency in training medium-sized models (<20B parameters). After the customary TPU wrangling (although much shorter this time, since JAX has far fewer footguns than Mesh TensorFlow), a 6B-parameter model, GPT-J-6B, was trained to completion and released.

An Explosion of BioML Research

The #alphafold channel grew quickly, attracting not only machine learning people but also biology and chemistry people interested in integrating new deep learning techniques into their work. By June, the Bio ML group had grown enough that it made sense to spin it off into its own server. While the main effort of replicating AlphaFold2 is still a work in progress, several additional projects have flourished:

- Eric Alcaide and Stella Biderman wrote a paper on faster algorithms for protein reconstruction, speeding up a mundane but important function by several orders of magnitude.

- Michael Pieler (MicPie) has been building a CLIP-style model for amino acid sequences.

- Stella Biderman is working with the authors of ProteinBERT to scale their model and try out autoregressive modeling ("proteinGPT" perhaps).

At this point, it’s most accurate to say that we have a biological ML research group, headed up by Phil and Eric.

The Revival of #art

Since the early days of Eleuther, there had always been the humble, underutilized #art channel. While we had hoped it would be a place for ML artists of various kinds to exchange and discuss their creations, its initial purpose seemed mostly to be the dissemination of obscure German memes.

I assure you, if you are a German ML researcher of a very specific age, this is the funniest shit you've ever seen.

But this would change after the release of CLIP, and the rapid development of new techniques to generate astonishing ML art using it. #art now serves as the promised hub for creating and exchanging techniques, prompts, ideas and results, with the brilliant engineer-artists hard at work developing cool new models and prompt engineering techniques alike.

abandoned bitcoin mine as imagined by nmkd

The Alchemist by Thomas Wijck as imagined by Janus

A Character Design of A Angel Warrior with it's Sword of Divines , HDR , Rendered in Detailed Engine , Rendered in Super sharp Engine , Details as imagined by Kianne

a beautiful epic wondrous fantasy painting of the ocean as imagined by Katherine Crowson

Perhaps the most visually compelling development of #art is what became known as the "unreal engine trick." CLIP was trained on the internet, and the internet contains a lot of extremely high-quality images with captions mentioning the Unreal Engine. CLIP noticed this, and we quickly realized that you could vastly improve the generated images by simply mentioning the Unreal Engine:

the angel of the sea. unreal engine as imagined by jbuster.

This is the first image generated with the unreal engine trick.

The Underground Studio, #the-faraday-cage

#the-faraday-cage started as a channel to let anyone on our Discord use our early Neo models, mostly to laugh at how terrible they were. The channel has taken on (an insane) life of its own thanks to the Discord bot @BATbot McHorse maintained by BoneAmputee, which lets anyone on the server create art using CLIP. If #art is the display gallery, #the-faraday-cage is the underground art studio.

The bot is hooked up to some of our unused GPUs and now handles a staggering number of requests, filling the Discord (and Twitter!) with spam art and creativity. At last count, it had produced over 35,000 images and survived two explosions in popularity after high-profile Hacker News and Twitter posts.

Looking Back, Looking Forward: The Future of EleutherAI

And so, we come to the current day. One year of this crazy cypherpunk-esque experiment, and with quite a lot to show for our efforts.

People may know us as "those guys building an open source GPT-3", but this was never our final ambition. Building large models is fun and gratifying, but at heart, we are researchers, and this is just an important first step to enable the kind of research we want to do.

EleutherAI has grown into a true collective. We host many different kinds of research in different ML subfields. But ultimately, we also believe in the power of AI, in the coming revolutions it will bring, and in its dangers.

We believe that AI alignment is one of the most important problems, if not the most important problem, to be working on in our lifetime. This is at the core of what motivates us to build and understand these large language models. Our understanding of these powerful technologies is extremely primitive, and this does not bode well for our future safety and flourishing.

Safety research was always a primary motivation for our work at EleutherAI, as we explained in our blogpost Why Release A Large Language Model?. We think that access to large, pretrained models will enable large swathes of research that would not have been possible while such technologies are locked away behind corporate walls. For-profit entities have explicit incentives to downplay risks and discourage security probing. We want to help the wider safety and security communities access and study these new technologies.

We are doing just this ourselves. We have produced our first contribution to the wider alignment community in the form of our Alignment Forum post Thoughts on the Alignment Implications of Scaling, which summarizes many of the insights and ideas we have had so far.

And this is just the start: multiple groups inside EleutherAI are expanding their work on the safety of large, self-supervised models and their alignment with human values.

Reflections

Do we even know what EleutherAI is after a year? EleutherAI has been many things to many people.

To my mind, EleutherAI is an AI hacking lab, and one unlike any other. In a year when many of us were suddenly cut off from in-person communication, our rag-tag assortment of researchers and hackers from around the globe somehow assembled on a Discord server and found ourselves trying to build the next big thing, because wouldn't it be fun if we actually did it? There is a real excitement about advances in AI research, particularly with large-scale models, and a real drive to take ideas and put them to work quickly. The speed at which an idea can go from inspiration by the latest arXiv paper drop to full-scale experiment runs is astounding (there is literally a #speedrun channel). And while we do work on very important topics, a big part of EleutherAI is its more informal approach to research. For me, it's been a platform for more casual discussion, a replacement for the water-cooler conversations where I can bounce my silliest ideas and takes off others, poke fun at the field and ourselves, but very occasionally go "this, but unironically."

I first heard about EleutherAI in August, during a paper discussion in Yannic Kilcher’s Discord server. Someone mentioned a group of people trying to replicate GPT-3, and being one of the salty people who hadn’t yet gotten access to the OpenAI API, I was curious how a rag-tag Discord server could even dare to have such an ambitious goal. Eventually I started observing from afar, began appreciating the knowledge base the entire server represented, and thought ‘damn, this might actually be possible’. I also started focusing on AI safety for the first time in my life, courtesy of our general focus on alignment, realizing the dangers a superhuman intelligence poses and how real they actually are as of today. It's been quite a ride for me: I found my first stable employment in no small part thanks to the friends I made at Eleuther, working on a project that will most certainly have a global impact, and, most importantly, I vastly increased my understanding of deep learning.

I was bored and stumbled in here from a Reddit post, then proceeded to spend the next month doing data scraping and processing. It turns out there is a lot of grind behind those nice papers on arXiv! That, and ML methods often don’t generalize well to different datasets. Eleuther has been an amazing learning experience for me, and a great maintainer of sanity during the turbulent start of the 2020s.

Even as a lurker in the early days, I immediately got the sense of "Oh, this is gonna be big" with Eleuther (then LibreAI). There’s something about a collaboration between folks from across the world, most of whom have never met or who go by pseudonyms, doing AI research against all odds, that really stokes a feeling of frenetic excitement. Also, EleutherAI happens to host some of the smartest and strangest characters I know, whom I have the pleasure of hanging out with in the #research channel and working on projects with. They say iron sharpens iron, and I think that applies: there’s a virtuous cycle here that somehow leads to high-caliber research and high-caliber memes alike. I’m truly excited to see where the next year takes all of us.

If nothing else, EleutherAI demonstrates how far initiative and drive can take you in today's world. EleutherAI is full of people who don't have traditional credentials, but that hasn't stopped us from doing awesome things. Many people, both established researchers and undergrads, come in and offer to help, but the people who stick around have nothing in common but an interest in pushing AI research forward. And that's pretty awesome.

I like to feel that my involvement was an accident---a lurker who got involved because they were the right man in the wrong place. What started slightly over five months ago as "it cannot hurt to stick around" snowballed into assisting the early development of GPT-NeoX, writing papers and maintaining a website. It has been among the most valuable experiences I have ever had, and I have had a tremendous amount of fun along the way.

In hindsight, EleutherAI filled a section of my life that I had been looking to fill for some time: a place where I could motivate myself to get work done that would truly make a difference in the world. I was loyal to the cause, and in return, it brought me experiences that I would have never imagined six months ago. We all may be a little crazy, but that is what is needed to tread new ground in a cutting-edge field. I cannot imagine what that new ground has in store, and I cannot wait to find out.

Like many history nerds, I have often wondered how cool it would have been to live in the time and place where the major intellectual ideas that would shape our world were formed. Imagine hanging out in the coffee houses of Europe with Enlightenment thinkers in the 1700s, or visiting Xerox PARC in the 1970s, or partying with the PayPal Mafia in the 2000s... But what are the odds, right?

Then one day during the pandemic summer of 2020 AD, I found myself in this strange, dream-like place, a community of international machine learning flaneurs who somehow became convinced that they could actually make history. At first, I thought it would just be a fun place to discuss new AI developments. But I soon discovered that yeah, these people are serious about their ambitions, and, more thrillingly, they actually wanted to have me on board! As it turns out, the fact that machine learning engineers despise JavaScript (while still needing it) became my entry ticket to some of the coolest projects I have ever worked on.

So, it has been a fun, surreal year—and yet something tells me we are just getting started.

I am a hacker in the original sense of the word: one who enjoys the intellectual challenge of creatively overcoming limitations of software systems to achieve novel and clever outcomes. When a friend introduced me to EleutherAI last summer, I was in a depressive funk. My friend hoped that the people and ideas of EAI would strike my interest and whimsy, and he couldn’t have been more correct.

EleutherAI is a special place. The passion, ambition, and bemusing arrogance of its members roused me back to life. It is a place where people don’t pause to ask “who am I to try to do this.” It is a place that follows the true spirit of the old French boast:

Mme, si c’est possible, c’est fait; si c’est impossible, cela se fera.

If it's possible, madam, it's done; if it's impossible, it shall be done!

I often like to joke about how Eleuther is an outlier in that it has the most publications of any discord server---we’re “just” a bunch of volunteers working on stuff for fun, and yet we’ve gotten such a huge amount of stuff done in the past year. Eleuther is about more than just research, though. It’s also one of the most vibrant online communities that I’ve ever had the pleasure of being a part of, with constant lively discussions about scaling, alignment, and other ML topics (and memes, of course) with the most interesting cast of interlocutors. I can’t overstate how proud I am of what we’ve created so far ex nihilo. Our first year has been an incredible journey, but we’re only just getting started---here's to many more.

Maybe it is the realization that Schmidhuber has been right all along.

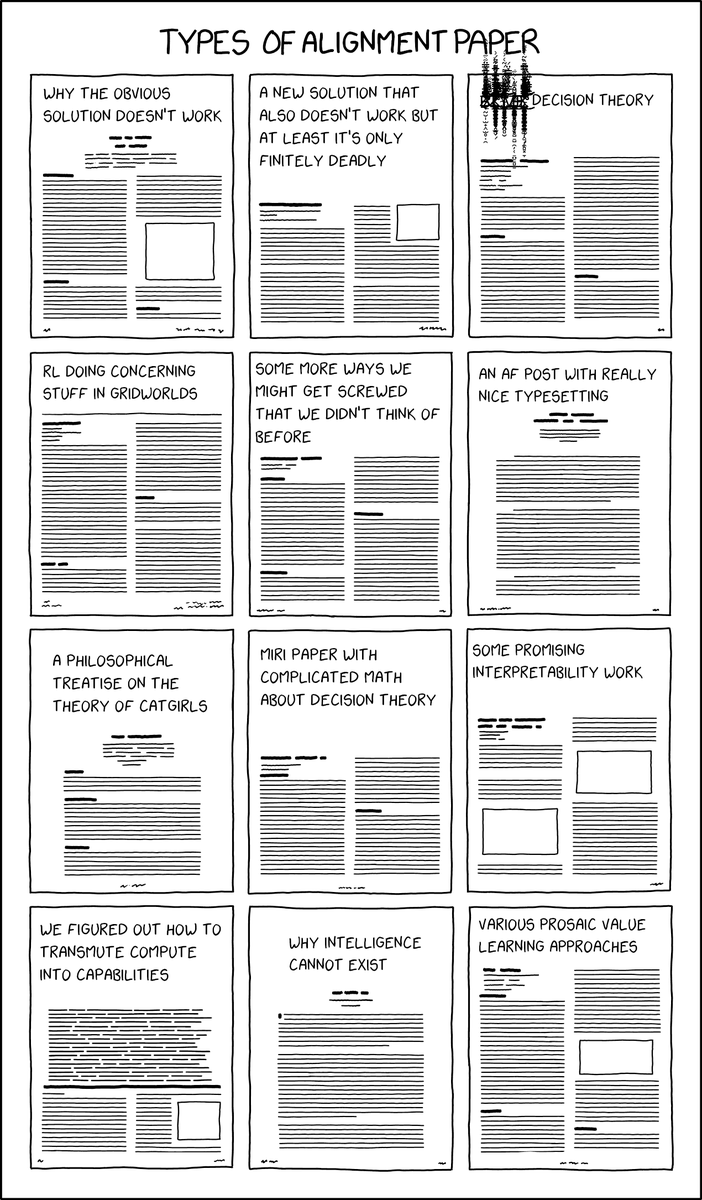

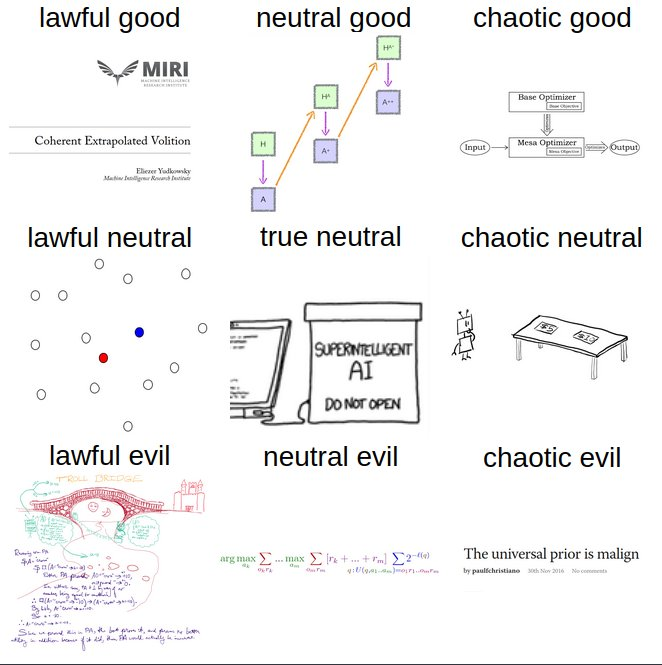

Or maybe it is the most advanced producer of alignment memes. (I promise these are hilarious if you are one of the three people that know what FDT is)

We’re not sure we know even today what EleutherAI really is, maybe we’ll figure it out this year.

What makes EleutherAI special? It’s hard to say, even for me. It’s lightning in a bottle. Right place, right time, right people. Nothing like this could have been planned. Living inside of something special feels remarkably not-special. I don’t know what the legacy of what we have accomplished here will ultimately be. But if EleutherAI has been one thing to me personally, it’s hope. A crack in the pervasive narrative of disempowerment. If a small group of smart, ambitious friends can make this much happen, what else is possible? History has a feeling of inevitability when viewed with the benefit of hindsight, but ultimately history is written by people. I think we’ve earned ourselves at least a curious footnote in history, but the story is far from over. To me, EleutherAI has been purpose, companionship and hope. Hope that the future isn’t yet carved in stone. Let us carve something beautiful.

It's been a weird year.

But for our little science project, it has also been an amazing year. All we can say is: thank you to everyone who made it possible.

On to an even better second year!